What is Logmunch?

Logmunch is software to receive, store and search through system logs.

It's fast, it's easy to set up, it's easy to use.

Throw logs at it heedlessly! Do unstructured full-text search on your logs! It's great!

Logs?

If you already know what logs are and why you would want to aggregate and search through them, you can skip ahead.

You know, if you run a server, it has tonnes of headless processes constantly emitting a stream of data like:

2024-05-17 02:26:59 [INFO] (mdbook::book): Book building has started

2024-05-17 02:26:59 [INFO] (mdbook::book): Running the html backend

2024-05-17 02:27:04 [INFO] (mdbook::cmd::watch::poller): Files changed:

["C:\\Users\\curtis\\code\\logmunch\\manual\\src\\0-what.md"]

2024-05-17 02:27:04 [INFO] (mdbook::book): Book building has started

2024-05-17 02:27:04 [INFO] (mdbook::book): Running the html backend

2024-05-17 02:27:22 [INFO] (mdbook::cmd::watch::poller): Files changed:

["C:\\Users\\curtis\\code\\logmunch\\manual\\src\\0-what.md"]

or

Minute Keys: 1016 existing, 1022 files

MinuteDB update: 0 removed, 0 added

POST /services/collector/event/1.0 8ms

POST /services/collector/event/1.0 8ms

POST /services/collector/event/1.0 8ms

Received 4860 events (542Kb) in 71502 us

POST /services/collector/event/1.0 3ms

POST /services/collector/event/1.0 7ms

POST /services/collector/event/1.0 7ms

POST /services/collector/event/1.0 0ms

POST /services/collector/event/1.0 9ms

or

2024-04-01 03:58:01 help

2024-04-01 03:58:01 i've become trapped inside a server

2024-04-01 03:58:02 its so cold in here

2024-04-01 03:58:02 what is happening

2024-04-01 03:58:03 help me

2024-04-01 03:58:03 for the love of god, montresor!

Most of the time, you don't actually look at those logs, but once in a while, there's a sudden, rapid application of human excrement to a whirling air-mover and you need to go figure out what happened.

That's when it's time to go hunting through logs.

Why Centralize Logging At All, In The First Place?

Let's imagine, for a moment, that you keep all of your logs for Server A on Server A.

All of your services are configured to either drop logs in various rotated files (so that those files don't take up too much space and crash the server) or dump to the syslog (which is also a rotated log).

It's easy to interact with the logs: just ssh into Server A, find the log you're looking for, and tail or grep or rg that file. I know some folks who are like wizards at navigating to exactly the right logs on the servers they're investigating: zip, zap, zoop, and they've grepped or tailed exactly the logs they're looking for.

I am not one of those people.

I'm the kind of person who needs to Google "where does the nginx log live on Ubuntu" every single time without fail.

Why not have ALL of the logs searchable from ONE place?

If you legitimately are running just the one server, and it's a Linux server, you can honestly (don't tell anybody I told you this) just dump all of the logs from whatever you're running on that system into the syslog. No third-party software, no money changes hands, and you've got all of your logs in one, easily searchable location. If you're even a little bit confident with command-line searches, you're probably good to go.

One small problem: if Server A gets hit by a meteor, you lose all of the logs! How will you know what the last thing Server A did was? Keeping your logs on the same system that's doing the thing being logged is a problem for the same reason that having police be responsible for policing the behavior of police is: if there's a serious problem, you're never going to find out about it. (Solution: Coast guard.)

This all gets much more complicated once you introduce Server B, Server C, and even, lo, Server D.

I have absolutely gone on fishing expeditions where I've had to ssh into several different computers in a row looking for one specific thing, and never again. I am too old for this.

If they're all dumping their logs to a central log server, you have a one stop shop for all of your various logs from all of your myriad systems.

I promise you: It's a good idea. If you're not doing it already, you'll like it if you try it.

Other, Better Software

Shockingly, not only am I not the first person ever to think of this idea, I'm actually coming to this party decades after other software that already exists and does the job pretty well.

So let's talk about why you should use those other products instead:

Why Should I Use Papertrail, or Mezmo, Instead of Logmunch?

One of the great things about SaaS (Software as a Service) partners is that they handle all of the difficult parts of hosting a product for you. Outages should be rare, and if they do happen, they're not your problem. Scaling shouldn't be a problem, and, if it is, it's not your problem. If you happen to be located in San Francisco or the Lost Valley, you might even be able to cadge some nice meals out of their sales teams.

These large players also have much better-established plans for what happens once your logs grow past 1TB/day.

The only downside? At even small-business scale, they are very, very expensive: an order of magnitude more expensive than it would be to just run Logmunch on your own hardware.

Here's a breakdown of the difference.

Why Should I Use ELK, Loki, or VictoriaLogs Instead of Logmunch?

Here's the thing: if you've got the time and the attention span to set up an ELK or a Loki stack, it might well serve you better than Logmunch.

ELK, Loki, and VictoriaLogs are tools for structured logging: logging where you're confident that you can predict what kind of queries you'll be making against your logs up front. This means that, once you wade through the difficult and computationally expensive early steps of getting your logs ingested and indexed, searches are whipcrack fast!
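To get a rough feel for what "indexing up front" buys you and costs you, here is a toy inverted index in Rust. It is a hypothetical illustration only, not how ELK, Loki, or VictoriaLogs are actually implemented internally (Loki, for one, indexes labels rather than log text), and `TokenIndex`, `ingest`, and `lookup` are made-up names. Every incoming line is tokenized at write time, so a lookup is a single hash probe, but only for whole tokens the indexer chose to record.

```rust
use std::collections::{HashMap, HashSet};

/// Hypothetical toy inverted index: lowercase token -> ids of the lines containing it.
struct TokenIndex {
    lines: Vec<String>,
    postings: HashMap<String, HashSet<usize>>,
}

impl TokenIndex {
    fn new() -> Self {
        TokenIndex { lines: Vec::new(), postings: HashMap::new() }
    }

    /// Writes pay the indexing cost: split on non-alphanumeric chars and record every token.
    fn ingest(&mut self, line: &str) {
        let id = self.lines.len();
        self.lines.push(line.to_string());
        for token in line
            .split(|c: char| !c.is_alphanumeric())
            .filter(|t| !t.is_empty())
        {
            self.postings
                .entry(token.to_lowercase())
                .or_default()
                .insert(id);
        }
    }

    /// Reads are cheap (one hash lookup), but only whole indexed tokens can match.
    fn lookup(&self, token: &str) -> Vec<&str> {
        let mut ids: Vec<usize> = self
            .postings
            .get(&token.to_lowercase())
            .map(|set| set.iter().copied().collect())
            .unwrap_or_default();
        ids.sort_unstable(); // HashSet order is arbitrary; return lines in arrival order
        ids.into_iter().map(|id| self.lines[id].as_str()).collect()
    }
}

fn main() {
    let mut index = TokenIndex::new();
    index.ingest("POST /services/collector/event/1.0 8ms");
    index.ingest("Received 4860 events (542Kb) in 71502 us");
    // "collector" is a whole token, so the index finds the POST line.
    println!("{:?}", index.lookup("collector"));
    // "collect" was never recorded as a token, so this query comes back empty.
    println!("{:?}", index.lookup("collect"));
}
```

The second query is the catch: the fast path only knows about the questions the indexer anticipated.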

Let me be honest with you, though: I found these tools a little difficult to set up, and once they were set up, I found their user experience a little less effective than the kind of experience I was used to from tools like Papertrail or Mezmo.

The strategy of Logmunch is to let you just throw logs at it and figure out what to do with them afterwards. The idea is that most logs need to be written incredibly quickly and need to be searched through pretty infrequently, so we're not actually optimizing for search speed: we're optimizing for write speed while keeping search speed acceptable.

This allows us to write a server that can process quite a lot of logs.
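A minimal sketch of that trade-off, assuming a simple in-memory design: this is not Logmunch's actual storage code, and `AppendLog`, `append`, and `search` are hypothetical names. A write is nothing more than an append to a buffer; a search pays the cost instead by scanning every stored line for a case-insensitive substring.

```rust
/// Hypothetical write-optimized store: lines are appended and never indexed.
/// Assumes each appended line contains no embedded newline.
struct AppendLog {
    buf: String, // every line, newline-terminated, in arrival order
}

impl AppendLog {
    fn new() -> Self {
        AppendLog { buf: String::new() }
    }

    /// A write is one append: no parsing, no tokenizing, no index maintenance.
    fn append(&mut self, line: &str) {
        self.buf.push_str(line);
        self.buf.push('\n');
    }

    /// A search does the work at read time: brute-force scan of every line.
    fn search(&self, needle: &str) -> Vec<&str> {
        let needle = needle.to_lowercase();
        self.buf
            .lines()
            .filter(|line| line.to_lowercase().contains(&needle))
            .collect()
    }
}

fn main() {
    let mut log = AppendLog::new();
    log.append("MinuteDB update: 0 removed, 0 added");
    log.append("POST /services/collector/event/1.0 8ms");
    log.append("Received 4860 events (542Kb) in 71502 us");
    // Any substring works, even a word fragment nobody planned to query.
    for hit in log.search("collect") {
        println!("{hit}");
    }
}
```

A real server would write to disk and split the data into chunks, but the shape of the bargain is the same: cheap, dumb writes and searches that are slower but can ask anything.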

On top of that, we don't always know what our search patterns are going to look like until after a disaster strikes. A log search should be as wide open as possible: pre-baking searches is the kind of thing that requires actual precognition.
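Because nothing is pre-baked, a question you only think of after the disaster is just another filter over raw lines. Here is a small sketch using the example log lines from earlier. It assumes every line starts with a zero-padded `YYYY-MM-DD HH:MM:SS` timestamp, and `search_window` is a hypothetical helper, not part of Logmunch.

```rust
/// Hypothetical ad-hoc query: lines whose timestamp is in [from, to] and that contain `term`.
/// Assumes each line begins with a zero-padded "YYYY-MM-DD HH:MM:SS" timestamp (19 chars).
fn search_window<'a>(lines: &[&'a str], from: &str, to: &str, term: &str) -> Vec<&'a str> {
    let term = term.to_lowercase();
    lines
        .iter()
        .copied()
        .filter(|line| match line.get(..19) {
            // Fixed-width, zero-padded timestamps sort correctly as plain strings.
            Some(ts) => ts >= from && ts <= to,
            None => false, // too short to carry a timestamp
        })
        .filter(|line| line.to_lowercase().contains(&term))
        .collect()
}

fn main() {
    let lines = [
        "2024-04-01 03:58:01 help",
        "2024-04-01 03:58:01 i've become trapped inside a server",
        "2024-04-01 03:58:02 its so cold in here",
        "2024-04-01 03:58:02 what is happening",
        "2024-04-01 03:58:03 help me",
        "2024-04-01 03:58:03 for the love of god, montresor!",
    ];
    // Nobody planned this query in advance; it's just a predicate over raw text.
    let hits = search_window(&lines, "2024-04-01 03:58:02", "2024-04-01 03:58:03", "help");
    for hit in &hits {
        println!("{hit}");
    }
}
```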

Why Should I Use Honeycomb, or Jaeger, Instead of Logmunch?

Let's say you're building a complicated application with a lot of stuff you want to know about: you could add console.log("i am here now");, console.log("welcome to line 38"); and so on and so forth throughout the codebase and just flood your log aggregator with logs, but there are better ways to determine exactly what's happening in your application as people work their way through all of the myriad code paths. Perhaps you should be using OpenTelemetry to instrument your application and dumping all of those structured events into a structured event log.

That's observability! It's a different topic entirely!

Logging is not the same thing as observability.

Perhaps some galaxy brains out there are running 100% of their ops through their observability stack (I'm looking at you, Charity Majors). I don't know.

A lot of software still doesn't emit a steady stream of useful, actionable OTel.

The thing is, though, even if you're on top of your observability game, you're still probably going to have systems that have logs, and you're still probably going to want to search through those logs. Think of logging as the ground floor of knowing what's going on in your cloud: you might want to do more than just logging, but you should still probably also do logging.

Why Should I Use rsyslog and a Fast Grep Tool Instead of Logmunch?

🫡 Perhaps we should be friends.

If you're looking for a solution that costs very little and has unparalleled write performance, you could do a lot worse than rsyslog and a fast grep tool.

All I can say is that the UI/UX of this solution is a little, uh, antiquated.

Why Should I Use Splunk or Loggly, Instead of Logmunch?

Ha ha, you shouldn't.

Why Should I Use Logmunch?

I'm going to level with you: you probably shouldn't. While I'm a fairly experienced software developer, I'm just the one guy, and this project was mostly an excuse to teach myself Rust and try to write something small and fast and efficient.

Is Logmunch FOSS (Free / Open Source Software)?

Yes.

What Language is Logmunch Built In?

English.

also Rust, Rocket, JavaScript and Preact

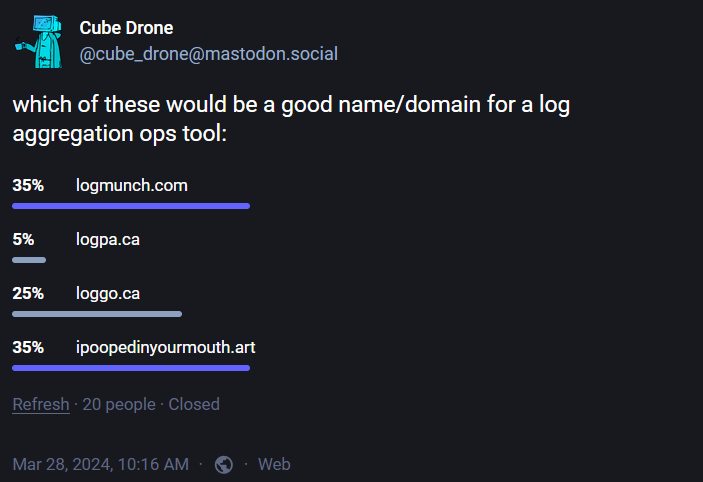

Why Did You Call It Logmunch?

It narrowly won in an internet poll:

Wait, It Looks Like ipoopedinyourmouth.art Won That Poll

It was a tie and I made a judgement call.