What is Logmunch?

Logmunch is software to receive, store and search through system logs.

It's fast, it's easy to set up, it's easy to use.

Throw logs at it heedlessly! Do unstructured full-text-search on your logs! It's great!

Logs?

If you already know what logs are and why you would want to aggregate and search through them, you can skip ahead.

You know, if you run a server, it has tonnes of headless processes constantly emitting a stream of data like:

2024-05-17 02:26:59 [INFO] (mdbook::book): Book building has started

2024-05-17 02:26:59 [INFO] (mdbook::book): Running the html backend

2024-05-17 02:27:04 [INFO] (mdbook::cmd::watch::poller): Files changed:

["C:\\Users\\curtis\\code\\logmunch\\manual\\src\\0-what.md"]

2024-05-17 02:27:04 [INFO] (mdbook::book): Book building has started

2024-05-17 02:27:04 [INFO] (mdbook::book): Running the html backend

2024-05-17 02:27:22 [INFO] (mdbook::cmd::watch::poller): Files changed:

["C:\\Users\\curtis\\code\\logmunch\\manual\\src\\0-what.md"]

or

Minute Keys: 1016 existing, 1022 files

MinuteDB update: 0 removed, 0 added

POST /services/collector/event/1.0 8ms

POST /services/collector/event/1.0 8ms

POST /services/collector/event/1.0 8ms

Received 4860 events (542Kb) in 71502 us

POST /services/collector/event/1.0 3ms

POST /services/collector/event/1.0 7ms

POST /services/collector/event/1.0 7ms

POST /services/collector/event/1.0 0ms

POST /services/collector/event/1.0 9ms

or

2024-04-01 03:58:01 help

2024-04-01 03:58:01 i've become trapped inside a server

2024-04-01 03:58:02 its so cold in here

2024-04-01 03:58:02 what is happening

2024-04-01 03:58:03 help me

2024-04-01 03:58:03 for the love of god, montresor!

Most of the time, you don't actually look at those logs, but once in a while, there's a sudden, rapid application of human excrement to whirling air-mover and you need to go figure out what happened.

That's when it's time to go hunting through logs.

Why Centralize Logging At All, In The First Place?

Let's imagine, for a moment, that you keep all of your logs for Server A on Server A.

All of your services are configured to either drop logs in various rotated files (so that those files don't take up too much space and crash the server) or dump to the syslog (which is also a rotated log).

It's easy to interact with the logs, just ssh into Server A, find which log you're looking for,

and tail or grep or rg that file. I know some folks who are like wizards at navigating

to exactly the right logs on the servers that they're investigating: zip, zap, zoop and they've

grepped or tailed exactly the logs that they are looking for.

I am not one of those people.

I'm the kind of person who needs to Google "where does the nginx log live on Ubuntu" every single time without fail.

Why not have ALL of the logs searchable from ONE place?

If you legitimately are running just the one server, and it's a Linux server, you can honestly (don't tell anybody I told you this) just dump all of the logs from whatever you're running on that system into the syslog. No third-party software, no money changes hands, and you've got all of your logs in one, easily searchable location. If you're even a little bit confident with command-line searches you're probably good to go.

One small problem: if Server A gets hit by a meteor, you lose all of the logs! How will you know what the last thing that Server A did was? Having your logging centralized on the same system that's doing the thing that's being logged is a problem for the same reason that having police being responsible for policing the behavior of police is: if there's a serious problem, you're never going to find out about it. (Solution: Coast guard.)

This all gets much more complicated once you introduce Server B, Server C, and even, lo, Server D.

I have absolutely gone on fishing expeditions where I've had to ssh into several different

computers in a row looking for a specific thing and never again. I am too old for this.

If they're all dumping their logs to a central log server, you have a one stop shop for all of your various logs from all of your myriad systems.

I promise you: It's a good idea. If you're not doing it already, you'll like it if you try it.

Other, Better Software

Shockingly, not only am I not the first person ever to think of this idea, I'm actually coming to this party decades after other software that already exists and does the job pretty well.

So let's talk about why you should use those other products, instead:

Why Should I Use Papertrail, or Mezmo, Instead of Logmunch?

One of the great things about SaaS (Software as a Service) partners is that they handle all of the difficult parts of hosting a product for you. Outages should be rare, and if they do happen, they're not your problem. Scaling shouldn't be a problem, and, if it is, it's not your problem. If you happen to be located in San Francisco or the Lost Valley, you might even be able to cadge some nice meals out of their sales teams.

These large players also have much more well-established plans for what happens once your logs grow past 1TB/day.

The only downside? At even small business scale, they are very, very expensive - an order of magnitude more expensive than it would be to just run Logmunch on your own hardware.

Here's a breakdown of the difference.

Why Should I Use ELK, Loki, or VictoriaLogs Instead of Logmunch?

Here's the thing: if you've got the time and the attention span to set up an ELK or a Loki stack, it might well serve you better than Logmunch.

ELK, Loki, and VictoriaLogs are tools for structured logging: logging where you're confident that you can predict what kind of queries you'll be making against your logs up-front. This means that, once you wade through the difficult and computationally expensive early steps of getting your logs ingested and indexed, searches are whipcrack fast!

Let me be honest with you, though: I found these tools a little difficult to set up, and once they were set up, I found their user experience a little less effective than the kind of experience I was used to from tools like Papertrail or Mezmo.

The strategy of Logmunch is to let you just throw logs at it and figure out what to do with them afterwards. The idea is that most logs need to be written incredibly quickly and need to be searched through pretty infrequently, so we're not actually optimizing for search speed: we're optimizing for write speed while keeping search speed acceptable.

This allows us to write a server that can process quite a lot of logs.

On top of that, we don't always know what our search patterns are going to look like until after a disaster strikes. A log search should be as wide open as possible: pre-baking searches is the kind of thing that requires actual precognition.

Why Should I Use Honeycomb, or Jaeger, Instead of Logmunch?

Let's say you're building a complicated application with a lot of stuff you want to know about:

you could add console.log("i am here now");, console.log("welcome to line 38"); and so

on and so forth throughout the codebase and just flood your log aggregator with logs, but

there are better ways to determine exactly what's happening in your application as people work

their way through all of the myriad code paths. Perhaps you should be using OpenTelemetry to instrument

your application and dumping all of those structured events into a structured event log.

That's observability! It's a different topic entirely!

Logging is not the same thing as observability.

Perhaps some galaxy brains out there are running 100% of their ops through their observability stack (I'm looking at you, Charity Majors), I don't know.

A lot of software still doesn't emit a steady stream of useful, actionable OTel.

The thing is, though, even if you're on top of your observability game, you're still probably going to have systems that have logs, and you're still probably going to want to search through those logs. Think of logging as the ground floor of knowing what's going on in your cloud: you might want to do more than just logging, but you should still probably also do logging.

Why Should I Use rsyslog and a fast grep tool Instead of Logmunch?

🫡 Perhaps we should be friends.

If you're looking for a solution that costs very little and has unparalleled write performance, you could do a lot worse than rsyslog and a fast grep tool.

All I can say is that the UI/UX of this solution is a little, uh, antiquated.
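For a sense of what that workflow looks like, here's a tiny self-contained sketch. It writes a few of the sample log lines from earlier into a temporary file (standing in for whatever file rsyslog writes to, which varies by setup) and searches them with plain grep.

```shell
# A temporary file stands in for the real log file.
log_file="$(mktemp)"
cat > "$log_file" <<'EOF'
2024-05-17 02:26:59 [INFO] (mdbook::book): Book building has started
2024-05-17 02:26:59 [INFO] (mdbook::book): Running the html backend
2024-05-17 02:27:04 [INFO] (mdbook::cmd::watch::poller): Files changed:
2024-05-17 02:27:04 [INFO] (mdbook::book): Book building has started
EOF

# -F treats the pattern as a fixed string rather than a regex;
# -i ignores case, -n prefixes each hit with its line number.
matches="$(grep -F -i -n 'book building' "$log_file")"
echo "$matches"

rm -f "$log_file"
```

If you have ripgrep installed, rg accepts the same three flags, so swapping grep for rg here should behave the same way, just faster on big files.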

Why Should I Use Splunk or Loggly, Instead of Logmunch?

Ha ha, you shouldn't.

Why Should I Use Logmunch?

I'm going to level with you, you probably shouldn't. While I'm a fairly experienced software developer, I'm just the one guy, and this project was mostly an excuse to teach myself Rust and try to write something small and fast and efficient.

Is Logmunch FOSS (Free / Open Source Software)?

Yes.

What Language is Logmunch Built In?

English.

also Rust, Rocket, JavaScript and Preact

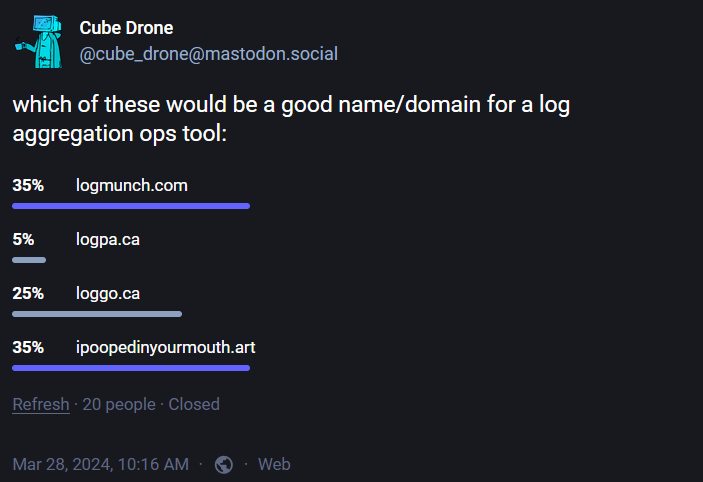

Why Did You Call It Logmunch?

It narrowly won in an internet poll:

Wait, It Looks Like ipoopedinyourmouth.art Won That Poll

It was a tie and I made a judgement call.

Install

The first step in using Logmunch is to install it, somewhere.

You're Going To Need a Secret Key

The rest of this process isn't going to work unless you have a secret key. It's necessary for authentication in logmunch to work.

Generate it like this:

$> openssl rand -base64 32

This will generate a value that looks like:

XVH72iQN0WETdbWJG+bglNoW/eo/8tCTa7iesC6f16w=

Save the value for later!
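If openssl isn't installed on the machine, something like the following should produce an equivalent value. This is only a sketch: the fallback assumes a Unix-style /dev/urandom and a base64 command on the path, and the secret_key variable name is made up for the example.

```shell
# Prefer openssl when it exists; otherwise fall back to /dev/urandom + base64.
# (The fallback is an assumption about your system, not something Logmunch requires.)
if command -v openssl >/dev/null 2>&1; then
  secret_key="$(openssl rand -base64 32)"
else
  secret_key="$(head -c 32 /dev/urandom | base64)"
fi

# 32 random bytes always encode to 44 base64 characters.
echo "${#secret_key}"
```

Either way, the value to save is whatever ends up in secret_key, not the length that gets printed.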

Docker

On any computer already running docker, you can get started with a simple command:

$> docker run -d \

--name=logmunch \

--restart=always \

--volume=/tmp/data:/tmp/data \

-p 1234:1234 \

--env "LOGMUNCH_DEFAULT_PASSWORD=hunter2" \

--env "ROCKET_SECRET_KEY=XVH72iQN0WETdbWJG+bglNoW/eo/8tCTa7iesC6f16w=" \

--env "ROCKET_PORT=1234" \

--env "ROCKET_ADDRESS=0.0.0.0" \

--env "LOGMUNCH_WRITE_TOKEN=ZoSPvJcP46xUTSJxmXz69B..." \

--env "LOGMUNCH_RAM_GB=7.8" \

--env "LOGMUNCH_DISK_GB=150" \

--env "LOGMUNCH_DATA_DIRECTORY=/tmp/data" \

--env "LOGMUNCH_MAX_WRITE_THREADS=8" \

logmunch/logmunch_x86

Wait, though! All of these environment values are set wrong: you should read more about the Arguments, below!

But What If I'm Running on ARM, not X86?

Oho, there's a trick to that:

$> docker run -d \

--name=logmunch \

--restart=always \

--volume=/tmp/data:/tmp/data \

-p 1234:1234 \

--env "LOGMUNCH_DEFAULT_PASSWORD=hunter2" \

--env "ROCKET_SECRET_KEY=XVH72iQN0WETdbWJG+bglNoW/eo/8tCTa7iesC6f16w=" \

--env "ROCKET_PORT=1234" \

--env "ROCKET_ADDRESS=0.0.0.0" \

--env "LOGMUNCH_WRITE_TOKEN=ZoSPvJcP46xUTSJxmXz69B..." \

--env "LOGMUNCH_RAM_GB=7.8" \

--env "LOGMUNCH_DISK_GB=150" \

--env "LOGMUNCH_DATA_DIRECTORY=/tmp/data" \

--env "LOGMUNCH_MAX_WRITE_THREADS=8" \

logmunch/logmunch_arm

That's right, logmunch/logmunch_arm instead of logmunch/logmunch_x86.

Linux

Logmunch is available in both logmunch_linux_x86 and logmunch_linux_arm, depending on what you want to ... run it on.

$> curl https://logmunch.com/versions/latest/logmunch_linux_x86 > logmunch

(this downloads the binary and saves it locally as logmunch)

$> chmod a+x logmunch

(this makes that binary executable)

$> LOGMUNCH_DEFAULT_PASSWORD=hunter2 \

ROCKET_SECRET_KEY=XVH72iQN0WETdbWJG+bglNoW/eo/8tCTa7iesC6f16w= \

ROCKET_PORT=1234 \

ROCKET_ADDRESS=0.0.0.0 \

LOGMUNCH_WRITE_TOKEN=ZoSPvJcP46xUTSJxmXz69B... \

LOGMUNCH_RAM_GB=7.8 \

LOGMUNCH_DISK_GB=150 \

LOGMUNCH_DATA_DIRECTORY=/tmp/data \

LOGMUNCH_MAX_WRITE_THREADS=8 ./logmunch

Logmunch should start running! Wow!

Unfortunately: as soon as you close the terminal window, Logmunch will go down with it. In order to keep Logmunch running, you need to engage the services of some kind of supervisor. If you're running Ubuntu, you could, for example, use systemd.

systemd

Let's imagine you're root on a freshly created Ubuntu box.

We've placed the logmunch binary at /root/logmunch, and run chmod a+x /root/logmunch to make it executable.

Create /root/logenv:

LOGMUNCH_DEFAULT_PASSWORD=hunter2

ROCKET_SECRET_KEY=XVH72iQN0WETdbWJG+bglNoW/eo/8tCTa7iesC6f16w=

ROCKET_PORT=80

ROCKET_ADDRESS=0.0.0.0

LOGMUNCH_WRITE_TOKEN=ZoSPvJcP46xUTSJxmXz69B

LOGMUNCH_RAM_GB=1.8

LOGMUNCH_DISK_GB=18

LOGMUNCH_DATA_DIRECTORY=/tmp/data

LOGMUNCH_MAX_WRITE_THREADS=2

(making sure, of course, to replace the environment variables with sensible values)
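Since this file holds the password, secret key and write token in plain text, it's probably worth locking it down so that only its owner can read it. A minimal sketch: ENV_FILE is a made-up variable for this example, and it defaults to ./logenv so the snippet is harmless to try out.

```shell
# ENV_FILE is a hypothetical variable for this example.
# The systemd setup above keeps the file at /root/logenv.
ENV_FILE="${ENV_FILE:-./logenv}"

# Create the file if it doesn't exist yet, then restrict it to owner read/write only.
touch "$ENV_FILE"
chmod 600 "$ENV_FILE"

# Show the resulting permissions; the mode column should read -rw-------
ls -l "$ENV_FILE"
```

Run it with ENV_FILE=/root/logenv set to match the path used here. systemd reads the EnvironmentFile as root, so a root-owned file with mode 600 should still load fine.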

Create /etc/systemd/system/logmunch.service:

[Unit]

Description=Logmunch

After=network.target

StartLimitIntervalSec=0

[Service]

Type=simple

Restart=always

RestartSec=3

User=root

EnvironmentFile=/root/logenv

ExecStart=/root/logmunch

[Install]

WantedBy=multi-user.target

Now, run systemctl start logmunch to kick off logmunch.

systemctl status logmunch and journalctl -u logmunch.service -n 100 should demonstrate that logmunch has successfully booted.

Once that's working, systemctl enable logmunch will ensure that logmunch starts on boot.

Kubernetes

idk man, ¯\_(ツ)_/¯

Arguments

At a minimum you should be setting a LOGMUNCH_DEFAULT_PASSWORD, tossing some high-quality garbage into the ROCKET_SECRET_KEY

and LOGMUNCH_WRITE_TOKEN, and figuring out your RAM, Disk, and CPU numbers for LOGMUNCH_RAM_GB and LOGMUNCH_DISK_GB and LOGMUNCH_MAX_WRITE_THREADS.

To be honest, you'll probably need to set a lot of these guys manually.

- LOGMUNCH_DEFAULT_PASSWORD: (default: admin) - the password to log in and ... see all the logs. For the love of god, set this to something.

- ROCKET_SECRET_KEY: (default: None) - generate a key with openssl rand -base64 32 and put it in here. Why?

- ROCKET_PORT: (default: 8000) - the port that Logmunch is going to run on.

- ROCKET_ADDRESS: (default: 127.0.0.1) - the IP address to serve Logmunch from. Set to 0.0.0.0 if you want to serve all comers.

- LOGMUNCH_WRITE_TOKEN: (default: null) - this is an authorization token that must be sent with all logs, or your Logmunch server will automatically discard those logs. You'll need to remember this when you're building a system to send logs to Logmunch, later on.

- LOGMUNCH_RAM_GB: (default: 1.8) - how many gigabytes of RAM are set aside for Logmunch. (Remember: leave a little for the underlying OS!)

- LOGMUNCH_DISK_GB: (default: 18) - how many gigabytes of disk space are set aside for Logmunch. (Remember: leave a little for the underlying OS!)

- LOGMUNCH_DATA_DIRECTORY: (default: ./data/) - where the logs are kept. (The /tmp/logs?)

- LOGMUNCH_MAX_WRITE_THREADS: (default: 2) - how many threads can devote themselves to writing logs. (If you have a computer with 4 vCPUs, set this to 4?)

- LOGMUNCH_LICENSE_KEY: (default: null) - set this to a valid license key to indicate that you've purchased Logmunch, for money.

- ROCKET_ENV: (default: production) - leave this set to "production".

example:

(note: this license key looks valid, but it's not a real license key)

$> LOGMUNCH_DEFAULT_PASSWORD=hunter2 \

ROCKET_SECRET_KEY=NQ81myfpRf9YIiWZjAuUtO9OQx4iu3ByEiyNFSHKU2SioTv3JeOZUyZPWzSeNysP \

ROCKET_PORT=1234 \

ROCKET_ADDRESS=0.0.0.0 \

LOGMUNCH_WRITE_TOKEN=ZoSPvJcP46xUTSJxmXz69Bi1L4OkBNr3DPChF1B4XdgNI6DMi7AL8NrcN3geDLXr \

LOGMUNCH_RAM_GB=7.8 \

LOGMUNCH_DISK_GB=150 \

LOGMUNCH_DATA_DIRECTORY=/tmp/logmunch \

LOGMUNCH_MAX_WRITE_THREADS=8 \

LOGMUNCH_LICENSE_KEY=logmunchabunchacruncha-018fa82f-700d-790c-a9c2-45fb6bc60c07-----yIKqtUSmdV4TEQanSrRi/a8ksuVQR4+vphJHoRcozAfFlOgx3md5C+ZNi9xCX1vWYIqF8Hw7WncLD7Nbmx6rDw== \

logmunch
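If you're not sure what numbers to plug in for RAM, disk and threads, a rough starting point can be read off the machine itself. The sketch below is Linux-only (it relies on nproc, /proc/meminfo and df), the DATA_DIR variable is made up for the example, and the 10% headroom is an arbitrary assumption standing in for "leave a little for the underlying OS", not a Logmunch recommendation.

```shell
# Directory whose filesystem we measure; hypothetical default of the current directory.
DATA_DIR="${DATA_DIR:-.}"

# One write thread per logical CPU.
threads="$(nproc)"

# Total RAM in kB, converted to GB with 10% held back for the OS.
mem_kb="$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)"
ram_gb="$(awk -v kb="$mem_kb" 'BEGIN { printf "%.1f", kb / 1048576 * 0.9 }')"

# Free space (kB) on the data directory's filesystem, same 10% headroom.
disk_kb="$(df -Pk "$DATA_DIR" | awk 'NR == 2 {print $4}')"
disk_gb="$(awk -v kb="$disk_kb" 'BEGIN { printf "%d", kb / 1048576 * 0.9 }')"

echo "LOGMUNCH_MAX_WRITE_THREADS=${threads}"
echo "LOGMUNCH_RAM_GB=${ram_gb}"
echo "LOGMUNCH_DISK_GB=${disk_gb}"
```

Treat the output as a first guess to sanity-check, especially if the box is also running other things that want RAM and disk.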

Careful About That Password

The password check is not meaningfully rate-limited, so if you're running a production system, pick a long, unique string of random data. Maybe generate one of those random OpenSSL strings like you did with the secret key, and store that in a password manager.

Don't Keep Important Data in /tmp

Most of the examples here use /tmp/data (or some other directory under /tmp) as the storage location for data.

Thing about /tmp is that the data in there is often cleared on system reboot. Which you probably don't want?

Maybe create a directory somewhere else.
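Something like this would do it. It's a sketch, and the DATA_DIR location is just an assumption for the example: any path that survives a reboot and has enough disk behind it should work.

```shell
# DATA_DIR is a hypothetical location; pick any path that survives reboots.
DATA_DIR="${DATA_DIR:-$HOME/logmunch-data}"

# Create the directory (and any missing parents) and keep other users out of it.
mkdir -p "$DATA_DIR"
chmod 700 "$DATA_DIR"

# This is the line you'd put in your environment for Logmunch.
echo "LOGMUNCH_DATA_DIRECTORY=${DATA_DIR}"
```

If you're running under Docker, remember that the --volume argument needs to point at the same directory, or the data will stay inside the container.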

I've Installed It: Now What?

Open up a browser and visit logmunch at yourserver.tld:1234!

You should be able to log in with the LOGMUNCH_DEFAULT_PASSWORD that you set, at which point you're ... looking at... no logs!

It's time to point some logs at Logmunch!

Send Logs to Logmunch

For all of these examples,

let's imagine that you are running your Logmunch server at http://example.com:8888,

and that you've set your LOGMUNCH_WRITE_TOKEN to aaaabbbbccccddddeeee

The Splunk HEC

Logmunch supports the Splunk "HTTP Event Collector" format, so any of Splunk's open-source log collection tools should just immediately work with Logmunch.

Which is good, do I look like I want to write a bunch of log collectors?
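If you'd like to poke the endpoint by hand before wiring up a collector, a request along these lines is how Splunk's HEC protocol usually looks. This is a sketch, not a documented Logmunch API: it assumes Logmunch accepts the standard "Authorization: Splunk <token>" header and a JSON body with an event field at /services/collector/event/1.0 (the path that shows up in the sample logs earlier). The host and source values and the send_event helper are made up for illustration.

```shell
# Values from the examples in this section.
LOGMUNCH_URL="http://example.com:8888"
LOGMUNCH_WRITE_TOKEN="aaaabbbbccccddddeeee"

# A minimal HEC-style event. "host" and "source" are standard HEC metadata
# fields; whether Logmunch uses them is an assumption.
payload='{"event": "hello from curl", "host": "server-a", "source": "manual-test"}'

# send_event is a hypothetical helper: it POSTs one JSON payload to the collector endpoint.
send_event() {
  curl --silent --show-error \
    --header "Authorization: Splunk ${LOGMUNCH_WRITE_TOKEN}" \
    --data "$1" \
    "${LOGMUNCH_URL}/services/collector/event/1.0"
}

# Uncomment to actually send the event:
# send_event "$payload"
echo "$payload"
```

The send is left commented out so that pasting the snippet doesn't fire requests at example.com; swap in your own URL and token first.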

From Docker

Directly from the Command Line

Let's imagine that you're running the application-that-generates-a-bunch-of-logs container.

Here are the necessary arguments to point an arbitrary docker container's logs at your logmunch server:

docker run -d \

--log-driver=splunk \

--log-opt splunk-token=aaaabbbbccccddddeeee \

--log-opt splunk-url=http://example.com:8888 \

--log-opt splunk-format=raw \

application-that-generates-a-bunch-of-logs

For The Entire Docker Instance

In /etc/docker/daemon.json:

{

"log-driver": "splunk",

"log-opts": {

"splunk-token": "aaaabbbbccccddddeeee",

"splunk-url": "http://example.com:8888"

}

}

From node.js

Use this: https://github.com/splunk/splunk-javascript-logging .

Directly from Syslog:

I'm going to level with you: I haven't 100% worked out how to do this, yet.

Surely I can use this tool, somehow, but it's ... it's much too complicated and fussy. I'm going to find a better solution for this, at some point.

From Kubernetes

idk man, ¯\_(ツ)_/¯

Batteries Not Included

There are lots of features that Logmunch doesn't have!

Dump Logs to S3

Lots of log providers give you the opportunity to periodically bundle up and export your logs to an S3 bucket!

This is a great way to:

- Spend a lot of money on S3.

- For logs that you will never, ever look at.

- I've been working at a company where we have been socking away 1 full year's worth of logs in the S3 archives for the past 9 years, and I can count on zero fingers the number of times we've wanted to go gallivanting around in a bunch of .tar.gz archives bundled together by hour looking for something. The needle to haystack ratio there is just insane.

Are you the kind of person who dutifully boxes up leftovers, only to put them in the fridge and let them sit for a month, so that you can throw them out at the end of the month? You're exactly the sort of person who needs to archive logs in S3 for a very long time.

Really, Any Kind of Backup At All

Most of Logmunch's config lives in its environment variables - so, if you can boot up a fresh Logmunch, you're back in the game.

Worst case scenario? You lose some logs. Logs are ephemeral.

Hot Backups/Read Scaling

I think it might be possible to use ngx_http_mirror_module to send all of your logs to a bank of identical Logmunch servers, but I haven't tried it.

Cluster Mode/Write Scaling

Eventually - eventually - you will reach the point where you simply produce more logs than can be written to a single server.

I want to say that Logmunch has probably got your back for quite a long time before this becomes the case, but it's going to happen eventually.

Building a sharding write engine that randomly sends logs to a bank of Logmunch servers would scale up those writes, and would be fairly trivial, but the difficult part comes when you want to search those logs: your search machinery needs to be able to query multiple servers and merge the results.

I know how I would build this, but, uh - it's a big project. So Logmunch can't do it.
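To make the "merge the results" part concrete, here's a toy illustration. It is not how Logmunch works, and not necessarily how a cluster mode would be built: it just shows that if each server returns its matches already sorted by timestamp, the merge step is a classic k-way merge, which sort -m does for files. The two temp files stand in for results from two hypothetical shards.

```shell
# Byte-wise collation keeps the ordering predictable across locales.
export LC_ALL=C

# Two temp files stand in for search results from two hypothetical shards,
# each already sorted by its leading timestamp.
shard_a="$(mktemp)"
shard_b="$(mktemp)"
printf '%s\n' \
  '2024-04-01 03:58:01 help' \
  '2024-04-01 03:58:02 its so cold in here' > "$shard_a"
printf '%s\n' \
  '2024-04-01 03:58:02 what is happening' \
  '2024-04-01 03:58:03 help me' > "$shard_b"

# sort -m merges inputs that are already sorted, without re-sorting them.
merged="$(sort -m "$shard_a" "$shard_b")"
echo "$merged"

rm -f "$shard_a" "$shard_b"
```

The hard parts in real life are everything around this: fanning the query out, dealing with a shard that's slow or down, and paging through results without pulling everything from every server.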

Graphing Search Results

It can be really, really useful to see a chart of how many log hits you're receiving over time.

It is unfortunate that I have not built that.
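In the meantime, if you can get matching lines into a terminal (from a file, a grep, wherever), the numbers behind such a chart can be approximated with standard tools. A rough sketch, assuming each line starts with a YYYY-MM-DD HH:MM:SS timestamp; the sample lines are illustrative.

```shell
# A temporary file stands in for a set of matching log lines.
hits="$(mktemp)"
cat > "$hits" <<'EOF'
2024-05-17 02:26:59 [INFO] (mdbook::book): Book building has started
2024-05-17 02:27:04 [INFO] (mdbook::book): Book building has started
2024-05-17 02:27:22 [INFO] (mdbook::book): Book building has started
EOF

# The first 16 characters are "YYYY-MM-DD HH:MM", so cutting there buckets hits by minute.
# uniq -c counts identical adjacent lines, hence the sort first.
per_minute="$(cut -c1-16 "$hits" | sort | uniq -c | awk '{print $2, $3, $1}')"
echo "$per_minute"

rm -f "$hits"
```

Each output line is a minute followed by the number of hits in that minute, which is the same data a hits-over-time chart would plot.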

Operational Stats & Graphs

RAM usage? Disk usage? The number of logs-per-second that Logmunch is processing? Some indication that Logmunch is under stress if you're sending it too many logs? These would be wonderful things to know.

Unfortunately, Logmunch doesn't surface any of these valuable details!

Lots of Log Ingest Formats

The more ways that Logmunch can accept logs, the better.

As of right now, it just accepts the Splunk HEC format and nothing else.

Alerts

It makes a lot of sense that you might want alerts to fire when certain, particularly terrifying or interesting logs are discovered.

Can't do that with Logmunch, though. No alerting infrastructure in there.

Logmunch-Term

Hey, it'd be nice to download a little cli, maybe write a .logmunch-config with a key in it, and do stuff like:

#> logmunch --tail "Metadata on mountpoint"

2024-06-04 23:47:40 marquee stderr [2024-06-05 06:47:40] INFO admin/command_metadata Metadata on mountpoint /chill.mp3 changed to "Nobuo Uematsu - Kefka"

2024-06-04 23:48:05 marquee stderr [2024-06-05 06:48:05] INFO admin/command_metadata Metadata on mountpoint /groove.mp3 changed to "Barry "Epoch" Topping - Welcome"

2024-06-04 23:50:18 marquee stderr [2024-06-05 06:50:18] INFO admin/command_metadata Metadata on mountpoint /groove.mp3 changed to "Unknown"

2024-06-04 23:50:19 marquee stderr [2024-06-05 06:50:19] INFO admin/command_metadata Metadata on mountpoint /groove.mp3 changed to "Parov Stelar - Soul Fever Blues (feat. Muddy Waters)"

2024-06-04 23:51:05 marquee stderr [2024-06-05 06:51:05] INFO admin/command_metadata Metadata on mountpoint /chill.mp3 changed to "Homestuck - Walk-Stab-Walk (R&E)"

2024-06-04 23:57:06 marquee stderr [2024-06-05 06:57:06] INFO admin/command_metadata Metadata on mountpoint /chill.mp3 changed to "Tycho - See"

2024-06-04 23:58:51 marquee stderr [2024-06-05 06:58:51] INFO admin/command_metadata Metadata on mountpoint /groove.mp3 changed to "Toby Fox - Queen"

2024-06-04 23:59:48 marquee stderr [2024-06-05 06:59:48] INFO admin/command_metadata Metadata on mountpoint /groove.mp3 changed to "PROTO·DOME - THE BEST 2 Minutes 14 Seconds OF YOUR LIFE (Peanut Plains)"

2024-06-05 00:02:02 marquee stderr [2024-06-05 07:02:02] INFO admin/command_metadata Metadata on mountpoint /groove.mp3 changed to "Bonnie Tyler - Total Eclipse Of The Heart"

2024-06-05 00:02:25 marquee stderr [2024-06-05 07:02:25] INFO admin/command_metadata Metadata on mountpoint /chill.mp3 changed to "Homestuck - Clockwork Sorrow"

2024-06-05 00:03:30 marquee stderr [2024-06-05 07:03:30] INFO admin/command_metadata Metadata on mountpoint /chill.mp3 changed to "Mazedude - Mummy Dance (Underground Forest Area)"

2024-06-05 00:07:36 marquee stderr [2024-06-05 07:07:36] INFO admin/command_metadata Metadata on mountpoint /chill.mp3 changed to "Nobuo Uematsu, Aki Kuroda - Suteki Da Ne"

Wow, that seems like a cool thing to be able to do. Can't do it, though.

More Better Timezone UI

The logmunch UI just assumes you're cool with it converting logs from UTC into whatever your browser timezone is. That's... not always the right assumption?

Hide Search Highlighting

I just want to see logs, I don't want to see where the logs match the searches! That's crazy!

Support

Support requests can be enqueued here.

You might need to poke me on social media, I don't actually check very often if there are issues.

Discussions

Join the vibrant and definitely real Logmunch community here.

Frequently Asked Questions

I haven't been asked any questions frequently enough for this page to have valid content, yet.

If you want to fix that, uh, ask me some questions:

infrequently-asked-questions@gooble.email